When it comes to the events, the big debate is about the contents of its body. Martin Fowler has written a great post on this topic.

Some devs argue that events should carry the complete load with it, I am calling them Fat Events (Event Carried State Transfer) in this blog. And then we have others who believe that events should be lightweight and containing minimum details, hence I call them Thin Events (Event Notification). In Thin Events, subscriber of the event is expected to request the required data after receiving the event.

Like every other dilemma in choosing suitable design patterns, this is a hard question to answer and it depends on various things. Lets compare them:

- Thin Events add coupling which is one of concerns we wanted to address by using events. It forces the event subscriber to call the publisher APIs to get more details. This means a subscriber cannot do its job if the publisher is down. In case of a bulk execution, it can adversely impact the APIs it will be calling. In contrast, Fat Events remove that dependency and lead to a better decoupling of the systems.

- The data in an event could be outdated by the time it is processed. This impacts both sides in quite opposite ways. Thin Events shine when the real-time information is crucial at the time of processing while Fat Events work better where sequential or point-in-time processing is required.

- Deciding the contents for an event is the part where Thin Events win, simply because it will only contain the bare minimum details for the subscriber to call back if required. But for Fat Events, we will have to think about the event body. We want to carry enough for all the subscribers but it comes at an expense: the publisher model would be coupled to the contract. It also adds an unnecessary dependency to consider in case you want to remove some data from the domain.

Thin Events do not cut it for me (most of the time)

From my experience so far, I think Thin Events does not offer anything that Fat Events cannot. As with Fat Events, the subscriber can also choose to call back the API if needed for any reason. In fact, calling back the API feels like a purpose-defeat for eventing implementation as it introduces temporal coupling. However, in certain circumstances the use case may not require (e.g. notifications to thick clients) or allow putting the payload on the events if the published on uncontrolled/unprotected or low bandwidth infrastructure. In those cases an event can carry a reference (URI) back to the change (e.g. resource, entity or event with version).

So are Fat Events the answer?

It depends, though carrying the complete object graph with every event is not a good idea. Loosely coupled bounded contexts are meant to be highly cohesive to act as a whole, so when you create event of a domain model, the question is how far you go in the object graph i.e. to an aggregate, or a bounded contexts or includes entities outside bounded context as well. We have to be very careful, as noticed above, it tightly couple the event contents to your domain model. So we don’t want thin events then how fat our event should be?

There are two further options, event body based on the event purpose (Delta Event) or the aggregate it represents (Aggregate Event).

Delta Events

So in Delta Events, the contents can consist of:

I am not sure if ‘Delta Events’ is a known term for this, but it is the best name I could think of to describe the event contents. The basic concept is to make events carry ‘just enough details’ to describe the change in addition to the identity (Id) of the entities changed. Delta events work even better with the Event Sourcing because they are like a log of what has happened, which is the basic foundation of the Event Sourcing. However, as an integration channel across the bounded contexts, delta events can add the overhead of maintaining multiple schemas and domain coupling when consumers need to get the complete state by stitching together the state by events.

- Public Id of the primary entity, that event is broadcast for.

- Fields that have changed in the event.

e.g. AccountDebited

{

AccountHolderUserId: <Account holder Id>

FromAccountNumber: "<Account Number that is debited from>"

ToAccountNumber: "<Account Number that money is credited to>"

Description: "Description of transaction"

AmountDebited: "<Amount that is debited from the account>"

Balance: "<Balance remaining after this transaction>"

TransactionId: "<To correlate with the parent transaction>"

}

The event above carries the complete details to explain what has happened along with the public Id of the entities involved.

Aggregate Event

We can make it a Fat Event and carry the additional content such as the Account holder name for the systems which may need that information e.g. notification, reporting, etc. But unfortunately, that will couple publisher domain unnecessarily to that data it does not need. So to work out the right content for the event, we will apply two key principles:

Domain-Driven Design: Scope the event body to the aggregate level. As Martin Fowlers explains in his post, aggregate is the smallest unit of consistency in Domain-Driven Design.

A DDD aggregate is a cluster of domain objects that can be treated as a single unit.

Data On The Outside: Events are an external interface of our system. So they fall into the “Data On the Outside” category explained in an excellent paper “Data on the Outside versus Data on the Inside” by Pet Helland. Applying the constraints of sharing data outside the bounded context, we will send a complete snapshot of the aggregate, including the fields that didn’t change as part of the event. This allows the consumer to keep the point-in-time reference of the state. I have unpacked this concept in my other post: Events on the outside vs Events on the inside

By following these principles, the body of the event contains the model for the aggregate (i.e. transaction) and a sequence number to highlight the point in time reference to the state of the aggregate.

e.g. AccountDebited

{

Sequence: Timestamp / Sequence Number,

Transaction: {

TransactionId: "<To correlate with the parent transaction>",

FromAccountNumber: "<Account Number that is debited from>",

ToAccountNumber: "<Account Number that money is credited to>",

Description: "Description of transaction",

Amount: "<Amount that is debited from the account>",

Balance: "<Balance remaining after this transaction>"

}

}

How does the subscriber get the missing pieces?

In both above event types, there can be some related information that is not present in the event body e.g. references to entities of other bounded context such as account holder name. It depends on the situation, for a new subscriber you may want to listen to the other events in the system and build a database of the subscriber’s domain.

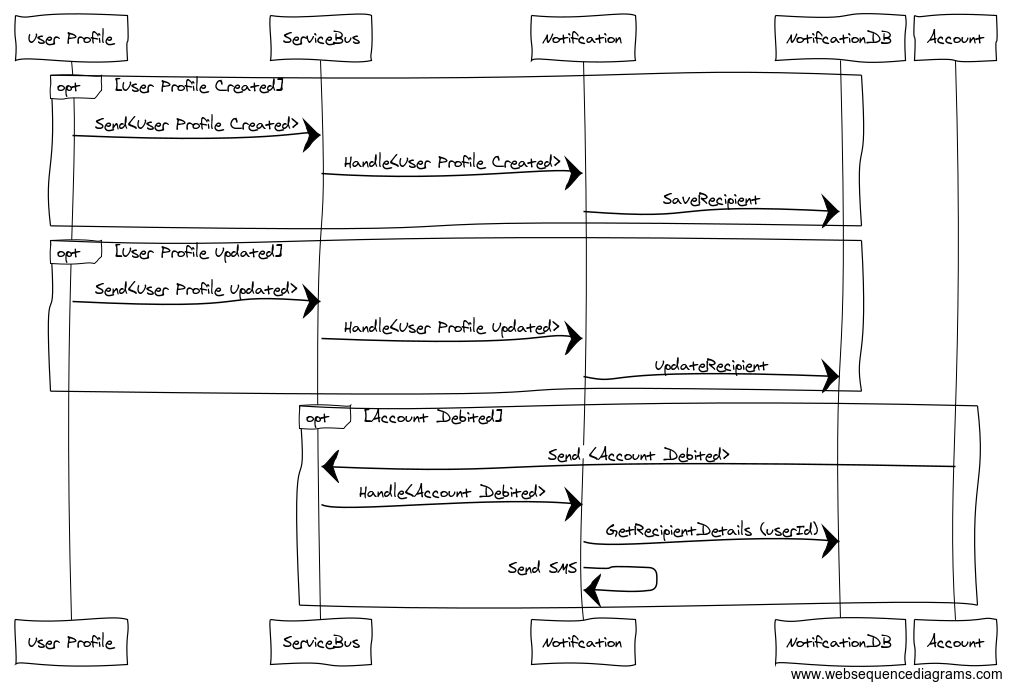

Let’s assume we have a Notification domain that will send an SMS to the account holder whose account will be debited if the amount to debit is above $100.00. SMS body would be like:

Hi <First Name> <Last Name>,

Your account number <account number> is debited. Transaction details:

Amount: $<amount>

Remaining Balance: $<remaining balance>

Description: $:<Transaction description>

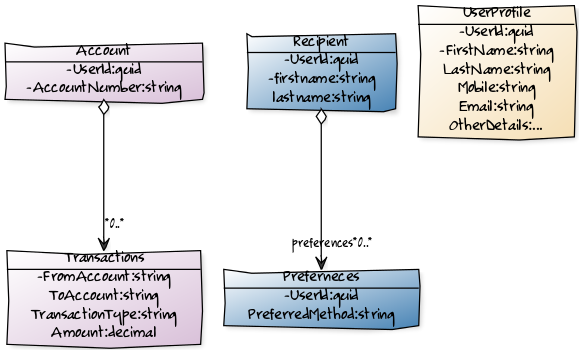

For the sake of an example, lets assume our system is cut into the following sub domains:

- User Profile: Maintains account holder details such as Name, Address, Mobile Number and Email etc.

- Notification: Maintain user preferences about receiving notifications and sending notifications.

- Accounts: Maintain ledger of debits and credits of accounts

To send the SMS, we have almost all the details in the event body except the account holder name and mobile number of the recipient. In our imaginary system, it is the user profile domain that has those details instead of the accounting domain, that is broadcasting the event. There are two things that can happen here:

Notification domain can call the User Profile to get more details before sending the SMS. So now we always get the up to date contact details of the user but at the cost of run time dependency. If User Profile system is down for any reason, it would break the notification system as well.

OR

We can make the notification system listen to the events from the User Profile system as well to maintain a local database of its recipients (translated from account holders) along with their notification preferences. e.g.

- UserProfileAdded

- UserProfileUpdated

I prefer the later option to keep the system decoupled and avoid run time dependency. The workflow will be like this:

The subscriber will build the store as they go, but still there can be scenarios where they don’t have this data:

- New Subscriber

- An event lost for some reason – not sent, not delivered, delivered but failed etc.

For the first scenario you can start with some data migration to give it a seed data, but for the second case data migration may not work. I have dealt with this situation by introducing a snapshot builder.

Snapshot Builder:

If subscriber does not know about the entity it has received in the event, it would call the relevant systems and build a snapshot. This can be quite handy in scenarios where occasionally the subscriber needs to sync its data (translated from other domains) with the original owner of the data.

I hope you find this post useful, if not the complete approach, it may give you some options to consider when thinking about the event contents.

I face a situation where a fat event can be problematic: unit testing

1 let’s say somewhere developer1 fire a fat event (the whole information of an aggregate), at the beginning, there is only one subscriber that need 5 out 10 fields of the aggregate, so in the UT of the publisher, I only test the 5 fields

2 later another subscriber came and need another 3 fields, in the UT of the new subscriber, the new developer(deveoper2) manually construct and fire the event, setting only the 3 fields that he needs

3 sometime later, yet another developer(developer3) modified the original publisher and changed/remove one of the 3 fields that developer2 needed, at this time no test will fail(both the publisher and the 2 subscribers), but the function is not working now

LikeLike

Thanks for your comment. If I am reading properly you are highlighting the problem of managing breaking changes. Event schema should be managed via same engineering practices as we do for other contracts like API e.g. versioning, schema registry etc.

Publishers contract testing should not rely on the consumer’s consumption so publishers tests should provide coverage of entire schema.

LikeLike

From this description, it sounds like developer2 never actually wrote a unit test which tested all the relevant units, i.e., the dispatching of the event.

You don’t need 2 developers or interface versioning to run into that problem. Developer2 could simply have fat-fingered the code which dispatched the event, and the hypothetical unit test would have passed, while the actual code did nothing at all.

For that matter, your scenario isn’t even about events. If you practice TDD and depend only on failing tests to catch all possible bugs, then any possible code path which isn’t fully tested is a potential catastrophe.

LikeLike

[…] Events: Fat or Thin? (codesimple.blog) […]

LikeLike